
Beware! Medical AI systems are easy targets for fraud and error

You can fake diagnoses with adversarial examples

Medical AI systems are particularly vulnerable to attacks and have been overlooked in security research, a new study suggests.

In a paper [PDF] published on arXiv, researchers from Harvard University say they have demonstrated the first examples of how medical AI systems can be manipulated. Sam Finlayson, lead author of the study, and his colleagues Andrew Beam and Isaac Kohane used the projected gradient descent (PGD) attack on image recognition models to try to get them to see things that aren't there.

The PGD algorithm finds the best pixels to fudge in an image to create adversarial examples that will push models into identifying an object incorrectly and thus cause false diagnoses.

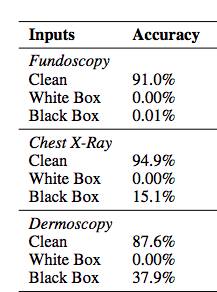

The team tested the attack on three image classification models: a fundoscopy model that detects diabetic retinopathy from retina scans, a second model that scans chest x-rays for signs of a collapsed lung, and a dermoscopy model that examines moles for signs of skin cancer.

To widen the scope of testing, the team used two techniques: first a white box attack, which assumes the hacker has full access to the model and understands how it works, then a black box attack, which assumes the miscreant has no such access.

After the PGD algorithm is applied, the very high accuracy levels for all three models plummet to zero for all white box attacks, and drop by more than 60 per cent for black box attacks.

Accuracy levels of three different image classification models before and after white box and black box PGD attacks.

Finlayson and Beam explained to The Register that the PGD attack runs through several iterations, each making tiny adjustments to the modified image. These are unnoticeable to us but fool the AI system into thinking it's seeing something that isn't there.

“What is remarkable and surprising is that the process can result in changes that are so small that they are invisible to human eyes, yet the neural network thinks that the picture now contains something different entirely.”
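To make the idea concrete, here is a minimal sketch of a PGD-style attack in plain Python. It is not the researchers' code: the 100-pixel "image", the hand-picked weights, the toy logistic classifier and the `eps`, `alpha` and `steps` values are all assumptions chosen for illustration. Each iteration nudges every pixel slightly in the direction that increases the model's loss, then clips the result so no pixel strays more than `eps` from the original.

```python
import math

def predict_proba(w, b, x):
    """Toy logistic classifier (an assumption): probability that x is positive."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def loss_gradient(w, b, x, y):
    """Gradient of the cross-entropy loss with respect to the pixels x."""
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def pgd_attack(w, b, x, y, eps=0.03, alpha=0.01, steps=10):
    """Iteratively perturb x to raise the loss, staying within eps per pixel."""
    x_adv = list(x)
    for _ in range(steps):
        grad = loss_gradient(w, b, x_adv, y)
        for i, g in enumerate(grad):
            # Small step in the direction that increases the loss.
            stepped = x_adv[i] + alpha * sign(g)
            # Project back: at most eps from the original, and a valid pixel value.
            lo = max(x[i] - eps, 0.0)
            hi = min(x[i] + eps, 1.0)
            x_adv[i] = min(max(stepped, lo), hi)
    return x_adv

# Hypothetical 100-pixel "image" and weights, for illustration only.
w = [0.5 if i % 2 == 0 else -0.5 for i in range(100)]
b = 0.0
x = [0.52 if i % 2 == 0 else 0.48 for i in range(100)]
y = 1  # true label: the condition is present

x_adv = pgd_attack(w, b, x, y)
before = predict_proba(w, b, x)
after = predict_proba(w, b, x_adv)
max_change = max(abs(a - o) for a, o in zip(x_adv, x))
print(f"confidence before: {before:.2f}, after: {after:.2f}, max pixel change: {max_change:.2f}")
```

In this toy setup no pixel moves by more than 0.03 on a 0-to-1 scale, yet the many small nudges add up and the model's confidence in the correct label falls below 50 per cent. Real attacks on deep networks follow the same loop, with the gradient computed by the network's framework rather than by hand.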

AIs - smart and incredibly foolish

It’s well known that image recognition models can be easily fooled. Look at this adversarial sticker that dupes machines into thinking a banana is actually a toaster. In more practical settings, it means that signs can be misread by self-driving cars or faces can be warped for facial recognition systems.

Medicine, however, “may be uniquely susceptible to adversarial attacks, both in terms of monetary incentives and technical vulnerability,” the paper argues.

It’s probably easier to fool these systems too. The majority of the best-performing image classifiers are built from open source models pre-trained on datasets like ImageNet. That means adversaries will have a good idea of how these systems work, and are more likely to succeed in attacking other AI models.

It’s unknown how much deep learning expertise medical practitioners will have in the future as AI gets rolled out in clinical settings. But at the moment these adversarial attacks are mainly just a research curiosity, said Finlayson and Beam.

“You need to have an understanding of mathematics and neural networks so that you can do the correct calculations to craft the adversarial example,” they explained. “However, the entire process could be easily automated and commoditized in an app or website that would allow non-experts to easily use these techniques”.

They hope that this will encourage more research into adversarial machine learning in healthcare to discover any potential infrastructural defenses that can be deployed so that the technology can be used more safely in the future. ®